Common spreadsheet mistakes in risk modeling

Quantitative risk analysis

involves a wide range of skills and tasks that risk modelers need to have

mastered before embarking on an important risk analysis of their own.

Among the most over-looked and underestimated of these skills is knowledge

of how to manipulate variables within Monte Carlo simulation models which

is the standard modeling technique for risk analysis.

Add, subtract, multiply, divide – we have learned how to use them with numbers at school by the age of ten at the latest, and we take it for granted that we have mastered them. It is hard to imagine a risk analysis model in any field that does not include some of these four operations. Yet these basic operations very often do not work in the same way when those numbers are uncertain. Most risk analysis models to some degree incorrectly manipulate uncertain variables in a model, perhaps because we don’t give a second thought to calculations using + - * / .

There is a widely held belief that one can take a standard spreadsheet model (e.g. a cashflow model with EBITDA or NPV calculation) and simply replace any value within that model that is uncertain with a function that generates random samples from some distribution to reflect its uncertainty. People mistakenly think that the rest of the model’s logic can be left unchanged.

And it really does matter. Incorrect manipulation of uncertain variables in a model will almost always produce simulation results with something close to the correct average value, which people use as a ‘reality check’, but completely wrong spread around that average value. The net effect is usually that decision-makers are presented with a wildly inaccurate estimate of the uncertainty (risk) of the outcomes of different decision choices. Some may realize that the model results are unrealistic and dismiss them, while others won’t and will make misguided decisions.

Mathematics

Most spreadsheets are made up of numbers and variables connected together with addition subtraction, multiplication and division. These operators (+, -, *, /) will not always work well when converting to a model where the values are uncertain:

Addition

Of the four operations, addition is the least problematic. The only issue that occurs is where the two variables being added are connected in some way (i.e. causally associated with each other either directly or indirectly so that their possible values are correlated). In other words, if the two variables you want to add together are affected by one or more of the same external factors then simple addition will very likely not be appropriate. If this is the case, the model should incorporate the inter-relationship using a correlation technique.

That issue aside, performing the simple possible addition of two uncertain variables gives a very nice way of illustrating how non-intuitive the results are from a Monte Carlo simulation – and therefore why it is so dangerous to rely upon one’s intuition when assessing whether the results look about right.

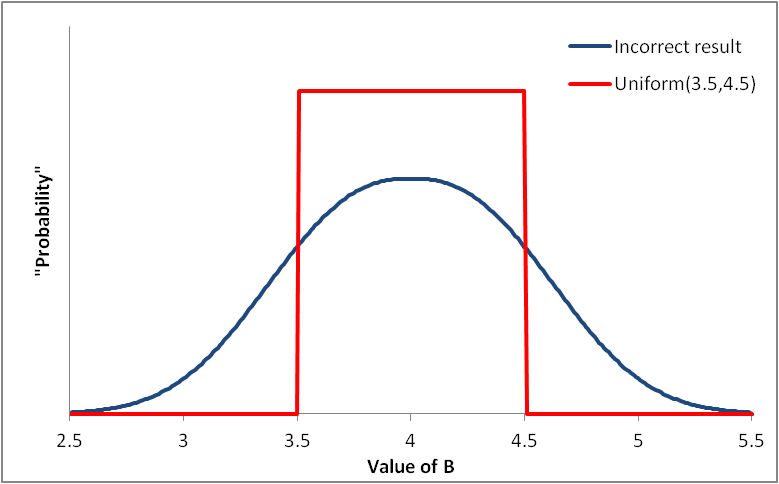

Let’s say that we have two costs – A and B, and we want to calculate the total C. If A = $1, and B = $4, we’d have C = $5. Now imagine that costs A and B are uncertain. A is equally likely to be somewhere between $0.50 and $1.50, and B is equally likely to be between $3.50 and $4.50. That means that C is also uncertain and must lie somewhere between $4 and $6. In fact, C would also most likely take a value of $5, which can be shown pictorially like this:

And in risk analysis modeling parlance like this:

Uniform(0.5,1.5) + Uniform(3.5,4.5) = Triangle(4,5,6)

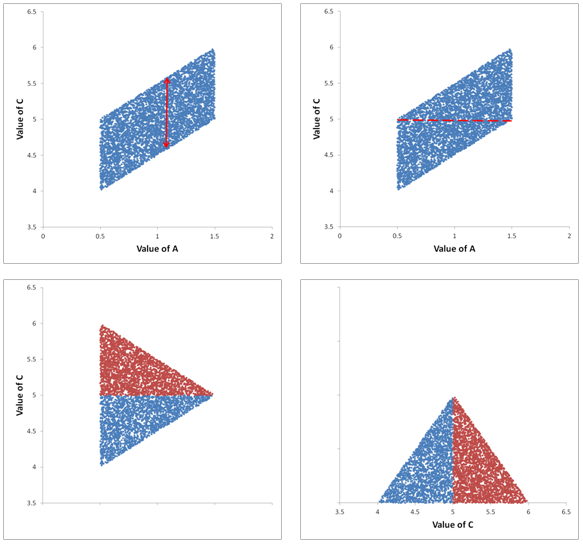

You might be surprised that the uncertainty of C follows a Triangle shape instead of another rectangle (Uniform). When asked to guess what two Uniform variables sum to, the answer often given is another Uniform. It isn’t that intuitive, yet it is hard to think of any simpler distributions we might add together. The following graphs illustrate where the Triangle comes from:

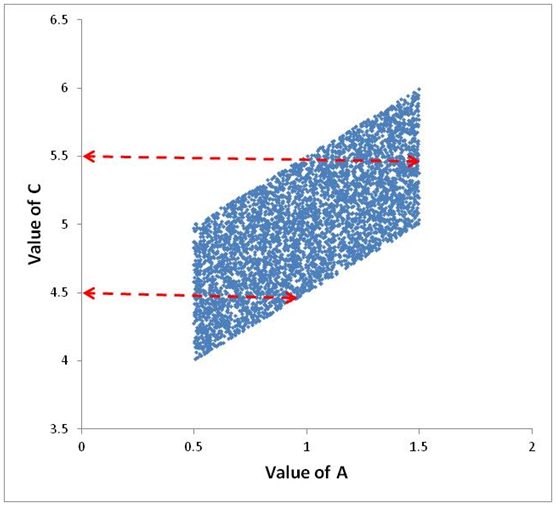

Upper left panel: A plot of Monte Carlo simulated values of A against C. For example, the bottom left corner shows samples where A and B were close to their minima (0.5 and 3.5 respectively to give a total C = 4) and the top right corner shows samples where A and B were close to their maxima (1.5 and 4.5 respectively to give a total C = 6). The range of the value of B is shown by the red arrows.

Upper right panel: The points are statistically evenly spread within his rhombus shape because A and B are uniformly distributed. Imagine that we split these simulated data in two according to the horizontal line shown …

Lower left panel: And then flip the position of the top half of the data. You can see that when projected onto the vertical axis the values of C follow a Triangle distribution

Lower right panel: Switch axes and we have a Triangle distribution. Note that the vertical axis now represents ‘probability since the height of the triangle is proportional to the fraction of simulated values that fall at or near the horizontal axis value.

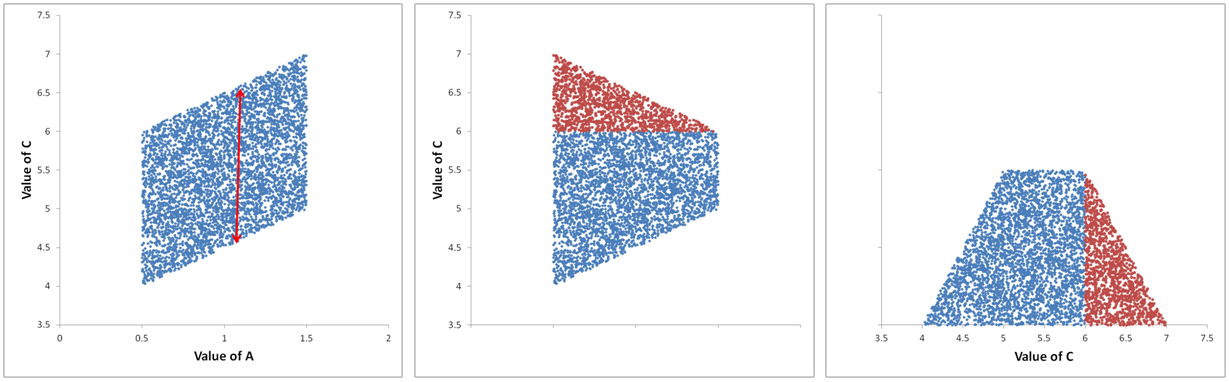

In this example, the distributions sum to a Triangle because A and B follow Uniform distributions with the same width (of $1). If the widths had been different, the resultant summation would have been trapezoidal, as shown by the following similar set of plots, where A = Uniform(0.5,1.5) and B = Uniform(3.5,5.5):

Multiplication

One must first determine whether the two variables are correlated in some way. If so, use a correlation technique.

The next consideration is whether the multiplication is actually a summation. For example, imagine that a business owner expects to have 5000 paying customers visit a shop during a sales period, and each may spend $20. The total sales revenue performed in a deterministic spreadsheet is then 5000 * $20 = $100,000, a short-hand way of adding up 5000 separate lots of $20 ($20 + $20 + ... + $20). Most business spreadsheet models will have many similar types of calculations multiplying number of units by cost or revenue per unit.

But there will obviously be considerable variation in how much each person spends. Let's say that variation can be described by a Lognormal(20,15) distribution, where 20 and 15 are the mean and standard deviation of the value of a random sale. The distribution looks like this, with a 3% chance of being above $60, and a 24% chance of being below 10:

To determine the distribution of total sales revenue we should add up 5000 separate Lognormal(20,15) distributions, since one sale might be very small, another very large. This formula:

= 5000 * VoseLognormal(20,15)

is incorrect, because whatever value is generated by the VoseLognormal function will be applied to all 5000 customers. For example, there is a 3% chance the function will generate a value greater than $60, but the probability that all 5000 people will spend so much is infinitesimally small. The formula would grossly over-estimate the uncertainty of the revenue

The approach is to use an aggregate function. In particular, the VoseAggregateMC (aggregate Monte Carlo) function provides a simple approach:

=VoseAggregateMC(5000,VoseLognormalObject20,15))

This function will sample 5000 times from the Lognormal distribution, add together the 5000 independent value, and return the summation to the spreadsheet. The difference between the two results is shown in the next image. The correct method has a much smaller uncertainty:

Subtraction

Subtraction is to be avoided in a risk model if possible. As a general rule, only subtract constants from a random variable, or subtract a random variable from a constant.

Let’s say we have two costs A and B that sum to C, but we know the values of A and C. If A = $1, and C = $5, we could calculate B: = $5 - $1 = $4. But when the values of A and B are uncertain, we cannot do this calculation at all!

From the example above, we had:

A: Uniform(0.5,1.5)

B: Uniform(3.5,4.5)

C: Triangle(4,5,6)

And we saw that A + B = C, i.e:

Uniform(0.5,1.5) + Uniform(3.5,4.5) = Triangle(4,5,6)

Simple algebra would have us believe that C – A = B, i.e.:

Triangle(4,5,6) - Uniform(0.5,1.5) = Uniform(3.5,4.5)

In fact the left and right sides of this equation are very different. If we calculated in a model

= Triangle(4,5,6) - Uniform(0.5,1.5)

in the hope of retrieving the correct distribution for B, we would in fact have grossly overestimated the uncertainty of B as shown in the following plot:

What went wrong? Let’s look again at the scatter plot of the previous example:

If the value of C were to be 5.5, as shown in the top arrow, the value of A can only lie between 1 and 1.5. Similarly, if C were 4.5, A must lie between 0.5 and 1. In other words, the possible distribution of A is dependent on the value of C, which is not accounted for in a simple formula like:

C – A = Triangle(4,5,6) - Uniform(3.5,4.5)

The general rule here is that one should avoid doing subtractions like C – A when using Monte Carlo simulation whenever the value of C incorporates the value of A. So, for example:

-

C = total cost of running a factory, A = personnel cost, C-A will not calculate the non-personnel cost. Instead, you should construct your model the other way round - calculate the personnel costs and non-personnel costs, then add them together to obtain the total cost

-

C = revenue, A = cost, then C-A will calculate the profit, as long as any relationship between C and A has been accounted for in the model (e.g. relationship to the volume of goods sold).

Division

Nearly everyone who starts doing some risk modeling makes mistakes when they include division in their models. It is very confusing and unintuitive to begin with, so avoid using division in your models unless you have had some really good risk analysis training.

We can illustrate the problem using the example above for multiplication. From Central Limit Theorem we could have predicted that the total shop earnings would approximately follow a Normal(10000, 1061) distribution. The following plot compares the two:

Imagine that we have this CLT estimate, and want to figure out how much each person spends. We might write this:

Normal(12000, 1061) / 5000

This is the average amount that each person spends in the shop, but it could also be the actual amount each individual person spends if they all spent the same amount. There is no distinction between the two in this calculation. However, if each customer spends an amount that is different and independent of other customers, there is no way to back-calculate the distribution of the individual expenditure (which was a Lognormal(20,15) you’ll remember). We cannot know with the above information what the distribution of the amount spent by individual customers is, but it turns out that we can state the mean and standard deviation if they all make purchasing decisions independently.

Looking at the problem the other way round, we might want to know how many individuals following a certain distribution would be needed to have a certain total. For example, imagine that we have a sales target of $50,000, and a random sale follows a Lognormal(20,15) distribution. This formula would be incorrect:

=50000 / VoseLognormal(20,15)

because it would be assuming all sales were the same amount. Instead, we should use this ModelRisk formula:

=VoseStopSum(VoseLognormalObject(20,15),50000)

The VoseStopSum function is essentially the reverse of the VoseAggregateMC function. Again, the results using the correct method are far narrower than the incorrect method:

Representing an uncertain variable more than once in the model

When we develop a large spreadsheet model, perhaps with several linked sheets in the same file, it is often convenient to have some parameter values that are used in several sheets appear in each of those sheets. This makes writing formulae and tracing back precedents in a formula quicker. Even in a deterministic model (i.e. a model where there are only best guess values, not distributions) it is important that there is only one place in the model where the parameter value can be changed (at Vose Software we use the convention that all changeable input parameter values or distributions are labelled blue, as you will see in the example models). There are two reasons: first it is easier to update the model with new parameter values; and second it avoids the potential mistake of only changing the parameter values in some of the Cells in which it appears, forgetting the others, and thereby having a model that is internally inconsistent. For example, a model could have a parameter 'Cargo (mt)' in Sheet 1 with a value of 10,000 and a value of 12,000 in Sheet 2.

It becomes even more important to maintain this discipline when we create a Monte Carlo model if that parameter is modelled with a distribution. Although each Cell in the model might carry the same probability distribution, left unchecked each distribution will generate different values for the parameter in the same iteration, thus rendering the generated scenario impossible.

How to allow multiple occurrences of the same uncertain parameter

If it really is important to you to have the probability distribution formula in each Cell where the parameter is featured (perhaps because you wish to see what distribution equation was used without having to switch to the source sheet, we suggest that you make use of the U parameter in ModelRisk's simulation functions to ensure that the same value is being generated in each place:

Cell A1: =VoseNormal(100,10,Random1)

Cell A2: =VoseNormal(100,10,Random1)

where Random1 is some Uniform(0,1) distribution will generate the same values in each cell.

More disguised versions of the same problem

The error described so far is where the formula for the distribution of a random variable is featured in more than one Cell of a spreadsheet model. These errors are quite easy to spot. Another form of the same error is where two or more distributions incorporate the same random variable in some way. For example, consider the following problem:

A company is considering restructuring its operations with the inevitable layoffs, and wishes to analyze how much it would save in the process. Looking at just the office space component, a consultant estimates that if the company were to make the maximum number of redundancies and outsource some of its operations, it would save $PERT(1.1, 1.3, 1.6)M of office space costs. On the other hand, just making the redundancies in the accounting section and outsourcing that activity, it could save $PERT(0.4, 0.5, 0.9)M of office space costs.

It would be quite natural, at first sight, to put these two distributions into a model, and run a simulation to determine the savings for the two redundancy options. On their own, each cost saving distribution would be valid. We might also decide to calculate in a spreadsheet Cell the difference between the two savings, and here we would potentially be making a big mistake. Why? Well, what if there is an uncertain component that is common to both office cost savings? For example, if inside these cost distributions is the cost of getting out of a current lease contract, uncertain because negotiations would need to take place. The problem is that by sampling from these two distributions independently, we are not recognizing the common element, which is a problem if that common element is not a fixed value, because it induces some level of correlation.

Calculating means instead of simulating variation

When we first start thinking about risk, it is quite natural to want to convert the impact of a risk to a single number. For example, we might consider that there is a 20% chance of losing a contract, which would result in a loss of income of $100,000. Put together, a person might reason that to be a risk of some $20,000 (ie 20% * $100,000). This $20,000 figure is known as the 'expected value' of the variable. It is the probability weighted average of all possible outcomes. So, the two outcomes are $100,000 with 20% probability and $0 with 80% probability:

Mean risk (expected value) = 0.2*$100,000 + 0.8*$0 = 20,000

The graph below shows the probability distribution for this risk and the position of the expected value.

Distribution for risk with 20% probability and $100,000 impact.

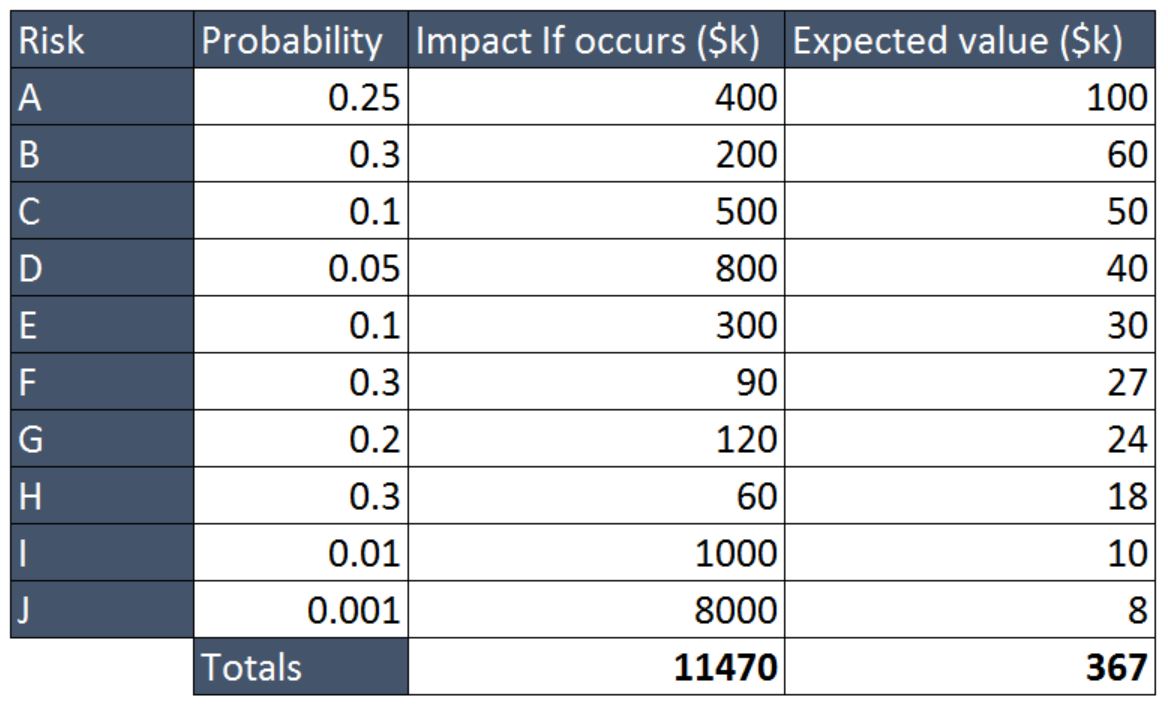

Calculating the expected values of risks might also seem a reasonable and simple method to compare risks. For example, in the following table, risks A to J are ranked in descending order of expected cost:

If a loss of $500k or more would ruin your company, you may well rank the risks differently - for impacts exceeding $500k, it is the probability of occurrence that is more important: so risks C, D, I and to a lesser extent, J pose a survival threat on your company. Note also that you may value risk C as no more severe than risk D because if either of them occur your company has gone bust.

On the other hand, if risk A occurs, giving you a loss of $400k, you are precariously close to ruin: it would just take any of the risks except F and H to occur (unless they both occurred) and you've gone bust.

Looking at the sum of the expected values gives you no appreciation

of how close you are to ruin. How would you calculate the probability

of ruin? The model solution can be found in this ![]() example model. Figure 2 plots the distribution

of possible outcomes for this set of risks.

example model. Figure 2 plots the distribution

of possible outcomes for this set of risks.

Probability distribution of total impact from risks A to J

Why calculating the expected value is wrong

From a risk analysis point of view, by representing the impact of a risk by its expected value we have removed the uncertainty (i.e. we can't see the breadth of different outcomes), which is a fundamental reason for doing risk analysis in the first place. That said, you might think that people running Monte Carlo simulations would be more attuned to describing risks with distributions rather than single values but this is nonetheless one of the most common errors.

Another, slightly more disguised example of the same error is when the impact is uncertain. For example, let's imagine that there will be an election this year and that two parties are running: the Socialist Democrats Party and the Democratic Socialists Party. The SDP are currently in power and have vowed to keep the corporate tax rate at 17% if they win the election. Political analysts reckon they have about a 65% chance of staying in power. The DSP promise to lower the corporate tax rate by one to four percent, most probably 3%. We might chose to express next year's corporate tax rate as:

Rate = 0.35*VosePERT(13%,14%,16%) + 0.65*17%

Checking the formula by simulating we'd get a probability distribution

which could give us some comfort that we've assigned uncertainty properly

to this parameter. However, a correct model would have drawn a value of

17% with probability 0.65 and a random value from the PERT distribution

with probability 0.35. How could you construct that model? This ![]() link gives a couple of examples. The

correct model would have considerably greater spread: the two results

are compared below:

link gives a couple of examples. The

correct model would have considerably greater spread: the two results

are compared below:

Comparison of correct and incorrect modeling of corporate tax rate for the SDP/DSP example.

Navigation

- Risk management

- Risk management introduction

- What are risks and opportunities?

- Planning a risk analysis

- Clearly stating risk management questions

- Evaluating risk management options

- Introduction to risk analysis

- The quality of a risk analysis

- Using risk analysis to make better decisions

- Explaining a models assumptions

- Statistical descriptions of model outputs

- Simulation Statistical Results

- Preparing a risk analysis report

- Graphical descriptions of model outputs

- Presenting and using results introduction

- Statistical descriptions of model results

- Mean deviation (MD)

- Range

- Semi-variance and semi-standard deviation

- Kurtosis (K)

- Mean

- Skewness (S)

- Conditional mean

- Custom simulation statistics table

- Mode

- Cumulative percentiles

- Median

- Relative positioning of mode median and mean

- Variance

- Standard deviation

- Inter-percentile range

- Normalized measures of spread - the CofV

- Graphical descriptionss of model results

- Showing probability ranges

- Overlaying histogram plots

- Scatter plots

- Effect of varying number of bars

- Sturges rule

- Relationship between cdf and density (histogram) plots

- Difficulty of interpreting the vertical scale

- Stochastic dominance tests

- Risk-return plots

- Second order cumulative probability plot

- Ascending and descending cumulative plots

- Tornado plot

- Box Plot

- Cumulative distribution function (cdf)

- Probability density function (pdf)

- Crude sensitivity analysis for identifying important input distributions

- Pareto Plot

- Trend plot

- Probability mass function (pmf)

- Overlaying cdf plots

- Cumulative Plot

- Simulation data table

- Statistics table

- Histogram Plot

- Spider plot

- Determining the width of histogram bars

- Plotting a variable with discrete and continuous elements

- Smoothing a histogram plot

- Risk analysis modeling techniques

- Monte Carlo simulation

- Monte Carlo simulation introduction

- Monte Carlo simulation in ModelRisk

- Filtering simulation results

- Output/Input Window

- Simulation Progress control

- Running multiple simulations

- Random number generation in ModelRisk

- Random sampling from input distributions

- How many Monte Carlo samples are enough?

- Probability distributions

- Distributions introduction

- Probability calculations in ModelRisk

- Selecting the appropriate distributions for your model

- List of distributions by category

- Distribution functions and the U parameter

- Univariate continuous distributions

- Beta distribution

- Beta Subjective distribution

- Four-parameter Beta distribution

- Bradford distribution

- Burr distribution

- Cauchy distribution

- Chi distribution

- Chi Squared distribution

- Continuous distributions introduction

- Continuous fitted distribution

- Cumulative ascending distribution

- Cumulative descending distribution

- Dagum distribution

- Erlang distribution

- Error distribution

- Error function distribution

- Exponential distribution

- Exponential family of distributions

- Extreme Value Minimum distribution

- Extreme Value Maximum distribution

- F distribution

- Fatigue Life distribution

- Gamma distribution

- Generalized Extreme Value distribution

- Generalized Logistic distribution

- Generalized Trapezoid Uniform (GTU) distribution

- Histogram distribution

- Hyperbolic-Secant distribution

- Inverse Gaussian distribution

- Johnson Bounded distribution

- Johnson Unbounded distribution

- Kernel Continuous Unbounded distribution

- Kumaraswamy distribution

- Kumaraswamy Four-parameter distribution

- Laplace distribution

- Levy distribution

- Lifetime Two-Parameter distribution

- Lifetime Three-Parameter distribution

- Lifetime Exponential distribution

- LogGamma distribution

- Logistic distribution

- LogLaplace distribution

- LogLogistic distribution

- LogLogistic Alternative parameter distribution

- LogNormal distribution

- LogNormal Alternative-parameter distribution

- LogNormal base B distribution

- LogNormal base E distribution

- LogTriangle distribution

- LogUniform distribution

- Noncentral Chi squared distribution

- Noncentral F distribution

- Normal distribution

- Normal distribution with alternative parameters

- Maxwell distribution

- Normal Mix distribution

- Relative distribution

- Ogive distribution

- Pareto (first kind) distribution

- Pareto (second kind) distribution

- Pearson Type 5 distribution

- Pearson Type 6 distribution

- Modified PERT distribution

- PERT distribution

- PERT Alternative-parameter distribution

- Reciprocal distribution

- Rayleigh distribution

- Skew Normal distribution

- Slash distribution

- SplitTriangle distribution

- Student-t distribution

- Three-parameter Student distribution

- Triangle distribution

- Triangle Alternative-parameter distribution

- Uniform distribution

- Weibull distribution

- Weibull Alternative-parameter distribution

- Three-Parameter Weibull distribution

- Univariate discrete distributions

- Discrete distributions introduction

- Bernoulli distribution

- Beta-Binomial distribution

- Beta-Geometric distribution

- Beta-Negative Binomial distribution

- Binomial distribution

- Burnt Finger Poisson distribution

- Delaporte distribution

- Discrete distribution

- Discrete Fitted distribution

- Discrete Uniform distribution

- Geometric distribution

- HypergeoM distribution

- Hypergeometric distribution

- HypergeoD distribution

- Inverse Hypergeometric distribution

- Logarithmic distribution

- Negative Binomial distribution

- Poisson distribution

- Poisson Uniform distribution

- Polya distribution

- Skellam distribution

- Step Uniform distribution

- Zero-modified counting distributions

- More on probability distributions

- Multivariate distributions

- Multivariate distributions introduction

- Dirichlet distribution

- Multinomial distribution

- Multivariate Hypergeometric distribution

- Multivariate Inverse Hypergeometric distribution type2

- Negative Multinomial distribution type 1

- Negative Multinomial distribution type 2

- Multivariate Inverse Hypergeometric distribution type1

- Multivariate Normal distribution

- More on probability distributions

- Approximating one distribution with another

- Approximations to the Inverse Hypergeometric Distribution

- Normal approximation to the Gamma Distribution

- Normal approximation to the Poisson Distribution

- Approximations to the Hypergeometric Distribution

- Stirlings formula for factorials

- Normal approximation to the Beta Distribution

- Approximation of one distribution with another

- Approximations to the Negative Binomial Distribution

- Normal approximation to the Student-t Distribution

- Approximations to the Binomial Distribution

- Normal_approximation_to_the_Binomial_distribution

- Poisson_approximation_to_the_Binomial_distribution

- Normal approximation to the Chi Squared Distribution

- Recursive formulas for discrete distributions

- Normal approximation to the Lognormal Distribution

- Normal approximations to other distributions

- Approximating one distribution with another

- Correlation modeling in risk analysis

- Common mistakes when adapting spreadsheet models for risk analysis

- More advanced risk analysis methods

- SIDs

- Modeling with objects

- ModelRisk database connectivity functions

- PK/PD modeling

- Value of information techniques

- Simulating with ordinary differential equations (ODEs)

- Optimization of stochastic models

- ModelRisk optimization extension introduction

- Optimization Settings

- Defining Simulation Requirements in an Optimization Model

- Defining Decision Constraints in an Optimization Model

- Optimization Progress control

- Defining Targets in an Optimization Model

- Defining Decision Variables in an Optimization Model

- Optimization Results

- Summing random variables

- Aggregate distributions introduction

- Aggregate modeling - Panjer's recursive method

- Adding correlation in aggregate calculations

- Sum of a random number of random variables

- Moments of an aggregate distribution

- Aggregate modeling in ModelRisk

- Aggregate modeling - Fast Fourier Transform (FFT) method

- How many random variables add up to a fixed total

- Aggregate modeling - compound Poisson approximation

- Aggregate modeling - De Pril's recursive method

- Testing and modeling causal relationships

- Stochastic time series

- Time series introduction

- Time series in ModelRisk

- Autoregressive models

- Thiel inequality coefficient

- Effect of an intervention at some uncertain point in time

- Log return of a Time Series

- Markov Chain models

- Seasonal time series

- Bounded random walk

- Time series modeling in finance

- Birth and death models

- Time series models with leading indicators

- Geometric Brownian Motion models

- Time series projection of events occurring randomly in time

- Simulation for six sigma

- ModelRisk's Six Sigma functions

- VoseSixSigmaCp

- VoseSixSigmaCpkLower

- VoseSixSigmaProbDefectShift

- VoseSixSigmaLowerBound

- VoseSixSigmaK

- VoseSixSigmaDefectShiftPPMUpper

- VoseSixSigmaDefectShiftPPMLower

- VoseSixSigmaDefectShiftPPM

- VoseSixSigmaCpm

- VoseSixSigmaSigmaLevel

- VoseSixSigmaCpkUpper

- VoseSixSigmaCpk

- VoseSixSigmaDefectPPM

- VoseSixSigmaProbDefectShiftLower

- VoseSixSigmaProbDefectShiftUpper

- VoseSixSigmaYield

- VoseSixSigmaUpperBound

- VoseSixSigmaZupper

- VoseSixSigmaZmin

- VoseSixSigmaZlower

- Modeling expert opinion

- Modeling expert opinion introduction

- Sources of error in subjective estimation

- Disaggregation

- Distributions used in modeling expert opinion

- A subjective estimate of a discrete quantity

- Incorporating differences in expert opinions

- Modeling opinion of a variable that covers several orders of magnitude

- Maximum entropy

- Probability theory and statistics

- Probability theory and statistics introduction

- Stochastic processes

- Stochastic processes introduction

- Poisson process

- Hypergeometric process

- The hypergeometric process

- Number in a sample with a particular characteristic in a hypergeometric process

- Number of hypergeometric samples to get a specific number of successes

- Number of samples taken to have an observed s in a hypergeometric process

- Estimate of population and sub-population sizes in a hypergeometric process

- The binomial process

- Renewal processes

- Mixture processes

- Martingales

- Estimating model parameters from data

- The basics

- Probability equations

- Probability theorems and useful concepts

- Probability parameters

- Probability rules and diagrams

- The definition of probability

- The basics of probability theory introduction

- Fitting probability models to data

- Fitting time series models to data

- Fitting correlation structures to data

- Fitting in ModelRisk

- Fitting probability distributions to data

- Fitting distributions to data

- Method of Moments (MoM)

- Check the quality of your data

- Kolmogorov-Smirnoff (K-S) Statistic

- Anderson-Darling (A-D) Statistic

- Goodness of fit statistics

- The Chi-Squared Goodness-of-Fit Statistic

- Determining the joint uncertainty distribution for parameters of a distribution

- Using Method of Moments with the Bootstrap

- Maximum Likelihood Estimates (MLEs)

- Fitting a distribution to truncated censored or binned data

- Critical Values and Confidence Intervals for Goodness-of-Fit Statistics

- Matching the properties of the variable and distribution

- Transforming discrete data before performing a parametric distribution fit

- Does a parametric distribution exist that is well known to fit this type of variable?

- Censored data

- Fitting a continuous non-parametric second-order distribution to data

- Goodness of Fit Plots

- Fitting a second order Normal distribution to data

- Using Goodness-of Fit Statistics to optimize Distribution Fitting

- Information criteria - SIC HQIC and AIC

- Fitting a second order parametric distribution to observed data

- Fitting a distribution for a continuous variable

- Does the random variable follow a stochastic process with a well-known model?

- Fitting a distribution for a discrete variable

- Fitting a discrete non-parametric second-order distribution to data

- Fitting a continuous non-parametric first-order distribution to data

- Fitting a first order parametric distribution to observed data

- Fitting a discrete non-parametric first-order distribution to data

- Fitting distributions to data

- Technical subjects

- Comparison of Classical and Bayesian methods

- Comparison of classic and Bayesian estimate of Normal distribution parameters

- Comparison of classic and Bayesian estimate of intensity lambda in a Poisson process

- Comparison of classic and Bayesian estimate of probability p in a binomial process

- Which technique should you use?

- Comparison of classic and Bayesian estimate of mean "time" beta in a Poisson process

- Classical statistics

- Bayesian

- Bootstrap

- The Bootstrap

- Linear regression parametric Bootstrap

- The Jackknife

- Multiple variables Bootstrap Example 2: Difference between two population means

- Linear regression non-parametric Bootstrap

- The parametric Bootstrap

- Bootstrap estimate of prevalence

- Estimating parameters for multiple variables

- Example: Parametric Bootstrap estimate of the mean of a Normal distribution with known standard deviation

- The non-parametric Bootstrap

- Example: Parametric Bootstrap estimate of mean number of calls per hour at a telephone exchange

- The Bootstrap likelihood function for Bayesian inference

- Multiple variables Bootstrap Example 1: Estimate of regression parameters

- Bayesian inference

- Uninformed priors

- Conjugate priors

- Prior distributions

- Bayesian analysis with threshold data

- Bayesian analysis example: gender of a random sample of people

- Informed prior

- Simulating a Bayesian inference calculation

- Hyperparameters

- Hyperparameter example: Micro-fractures on turbine blades

- Constructing a Bayesian inference posterior distribution in Excel

- Bayesian analysis example: Tigers in the jungle

- Markov chain Monte Carlo (MCMC) simulation

- Introduction to Bayesian inference concepts

- Bayesian estimate of the mean of a Normal distribution with known standard deviation

- Bayesian estimate of the mean of a Normal distribution with unknown standard deviation

- Determining prior distributions for correlated parameters

- Improper priors

- The Jacobian transformation

- Subjective prior based on data

- Taylor series approximation to a Bayesian posterior distribution

- Bayesian analysis example: The Monty Hall problem

- Determining prior distributions for uncorrelated parameters

- Subjective priors

- Normal approximation to the Beta posterior distribution

- Bayesian analysis example: identifying a weighted coin

- Bayesian estimate of the standard deviation of a Normal distribution with known mean

- Likelihood functions

- Bayesian estimate of the standard deviation of a Normal distribution with unknown mean

- Determining a prior distribution for a single parameter estimate

- Simulating from a constructed posterior distribution

- Bootstrap

- Comparison of Classical and Bayesian methods

- Analyzing and using data introduction

- Data Object

- Vose probability calculation

- Bayesian model averaging

- Miscellaneous

- Excel and ModelRisk model design and validation techniques

- Using range names for model clarity

- Color coding models for clarity

- Compare with known answers

- Checking units propagate correctly

- Stressing parameter values

- Model Validation and behavior introduction

- Informal auditing

- Analyzing outputs

- View random scenarios on screen and check for credibility

- Split up complex formulas (megaformulas)

- Building models that are efficient

- Comparing predictions against reality

- Numerical integration

- Comparing results of alternative models

- Building models that are easy to check and modify

- Model errors

- Model design introduction

- About array functions in Excel

- Excel and ModelRisk model design and validation techniques

- Monte Carlo simulation

- RISK ANALYSIS SOFTWARE

- Risk analysis software from Vose Software

- ModelRisk - risk modeling in Excel

- ModelRisk functions explained

- VoseCopulaOptimalFit and related functions

- VoseTimeOptimalFit and related functions

- VoseOptimalFit and related functions

- VoseXBounds

- VoseCLTSum

- VoseAggregateMoments

- VoseRawMoments

- VoseSkewness

- VoseMoments

- VoseKurtosis

- VoseAggregatePanjer

- VoseAggregateFFT

- VoseCombined

- VoseCopulaBiGumbel

- VoseCopulaBiClayton

- VoseCopulaBiNormal

- VoseCopulaBiT

- VoseKendallsTau

- VoseRiskEvent

- VoseCopulaBiFrank

- VoseCorrMatrix

- VoseRank

- VoseValidCorrmat

- VoseSpearman

- VoseCopulaData

- VoseCorrMatrixU

- VoseTimeSeasonalGBM

- VoseMarkovSample

- VoseMarkovMatrix

- VoseThielU

- VoseTimeEGARCH

- VoseTimeAPARCH

- VoseTimeARMA

- VoseTimeDeath

- VoseTimeAR1

- VoseTimeAR2

- VoseTimeARCH

- VoseTimeMA2

- VoseTimeGARCH

- VoseTimeGBMJDMR

- VoseTimePriceInflation

- VoseTimeGBMMR

- VoseTimeWageInflation

- VoseTimeLongTermInterestRate

- VoseTimeMA1

- VoseTimeGBM

- VoseTimeGBMJD

- VoseTimeShareYields

- VoseTimeYule

- VoseTimeShortTermInterestRate

- VoseDominance

- VoseLargest

- VoseSmallest

- VoseShift

- VoseStopSum

- VoseEigenValues

- VosePrincipleEsscher

- VoseAggregateMultiFFT

- VosePrincipleEV

- VoseCopulaMultiNormal

- VoseRunoff

- VosePrincipleRA

- VoseSumProduct

- VosePrincipleStdev

- VosePoissonLambda

- VoseBinomialP

- VosePBounds

- VoseAIC

- VoseHQIC

- VoseSIC

- VoseOgive1

- VoseFrequency

- VoseOgive2

- VoseNBootStdev

- VoseNBoot

- VoseSimulate

- VoseNBootPaired

- VoseAggregateMC

- VoseMean

- VoseStDev

- VoseAggregateMultiMoments

- VoseDeduct

- VoseExpression

- VoseLargestSet

- VoseKthSmallest

- VoseSmallestSet

- VoseKthLargest

- VoseNBootCofV

- VoseNBootPercentile

- VoseExtremeRange

- VoseNBootKurt

- VoseCopulaMultiClayton

- VoseNBootMean

- VoseTangentPortfolio

- VoseNBootVariance

- VoseNBootSkewness

- VoseIntegrate

- VoseInterpolate

- VoseCopulaMultiGumbel

- VoseCopulaMultiT

- VoseAggregateMultiMC

- VoseCopulaMultiFrank

- VoseTimeMultiMA1

- VoseTimeMultiMA2

- VoseTimeMultiGBM

- VoseTimeMultBEKK

- VoseAggregateDePril

- VoseTimeMultiAR1

- VoseTimeWilkie

- VoseTimeDividends

- VoseTimeMultiAR2

- VoseRuinFlag

- VoseRuinTime

- VoseDepletionShortfall

- VoseDepletion

- VoseDepletionFlag

- VoseDepletionTime

- VosejProduct

- VoseCholesky

- VoseTimeSimulate

- VoseNBootSeries

- VosejkProduct

- VoseRuinSeverity

- VoseRuin

- VosejkSum

- VoseTimeDividendsA

- VoseRuinNPV

- VoseTruncData

- VoseSample

- VoseIdentity

- VoseCopulaSimulate

- VoseSortA

- VoseFrequencyCumulA

- VoseAggregateDeduct

- VoseMeanExcessP

- VoseProb10

- VoseSpearmanU

- VoseSortD

- VoseFrequencyCumulD

- VoseRuinMaxSeverity

- VoseMeanExcessX

- VoseRawMoment3

- VosejSum

- VoseRawMoment4

- VoseNBootMoments

- VoseVariance

- VoseTimeShortTermInterestRateA

- VoseTimeLongTermInterestRateA

- VoseProb

- VoseDescription

- VoseCofV

- VoseAggregateProduct

- VoseEigenVectors

- VoseTimeWageInflationA

- VoseRawMoment1

- VosejSumInf

- VoseRawMoment2

- VoseShuffle

- VoseRollingStats

- VoseSplice

- VoseTSEmpiricalFit

- VoseTimeShareYieldsA

- VoseParameters

- VoseAggregateTranche

- VoseCovToCorr

- VoseCorrToCov

- VoseLLH

- VoseTimeSMEThreePoint

- VoseDataObject

- VoseCopulaDataSeries

- VoseDataRow

- VoseDataMin

- VoseDataMax

- VoseTimeSME2Perc

- VoseTimeSMEUniform

- VoseTimeSMESaturation

- VoseOutput

- VoseInput

- VoseTimeSMEPoisson

- VoseTimeBMAObject

- VoseBMAObject

- VoseBMAProb10

- VoseBMAProb

- VoseCopulaBMA

- VoseCopulaBMAObject

- VoseTimeEmpiricalFit

- VoseTimeBMA

- VoseBMA

- VoseSimKurtosis

- VoseOptConstraintMin

- VoseSimProbability

- VoseCurrentSample

- VoseCurrentSim

- VoseLibAssumption

- VoseLibReference

- VoseSimMoments

- VoseOptConstraintMax

- VoseSimMean

- VoseOptDecisionContinuous

- VoseOptRequirementEquals

- VoseOptRequirementMax

- VoseOptRequirementMin

- VoseOptTargetMinimize

- VoseOptConstraintEquals

- VoseSimVariance

- VoseSimSkewness

- VoseSimTable

- VoseSimCofV

- VoseSimPercentile

- VoseSimStDev

- VoseOptTargetValue

- VoseOptTargetMaximize

- VoseOptDecisionDiscrete

- VoseSimMSE

- VoseMin

- VoseMin

- VoseOptDecisionList

- VoseOptDecisionBoolean

- VoseOptRequirementBetween

- VoseOptConstraintBetween

- VoseSimMax

- VoseSimSemiVariance

- VoseSimSemiStdev

- VoseSimMeanDeviation

- VoseSimMin

- VoseSimCVARp

- VoseSimCVARx

- VoseSimCorrelation

- VoseSimCorrelationMatrix

- VoseOptConstraintString

- VoseOptCVARx

- VoseOptCVARp

- VoseOptPercentile

- VoseSimValue

- VoseSimStop

- Precision Control Functions

- VoseAggregateDiscrete

- VoseTimeMultiGARCH

- VoseTimeGBMVR

- VoseTimeGBMAJ

- VoseTimeGBMAJVR

- VoseSID

- Generalized Pareto Distribution (GPD)

- Generalized Pareto Distribution (GPD) Equations

- Three-Point Estimate Distribution

- Three-Point Estimate Distribution Equations

- VoseCalibrate

- ModelRisk interfaces

- Integrate

- Data Viewer

- Stochastic Dominance

- Library

- Correlation Matrix

- Portfolio Optimization Model

- Common elements of ModelRisk interfaces

- Risk Event

- Extreme Values

- Select Distribution

- Combined Distribution

- Aggregate Panjer

- Interpolate

- View Function

- Find Function

- Deduct

- Ogive

- AtRISK model converter

- Aggregate Multi FFT

- Stop Sum

- Crystal Ball model converter

- Aggregate Monte Carlo

- Splicing Distributions

- Subject Matter Expert (SME) Time Series Forecasts

- Aggregate Multivariate Monte Carlo

- Ordinary Differential Equation tool

- Aggregate FFT

- More on Conversion

- Multivariate Copula

- Bivariate Copula

- Univariate Time Series

- Modeling expert opinion in ModelRisk

- Multivariate Time Series

- Sum Product

- Aggregate DePril

- Aggregate Discrete

- Expert

- ModelRisk introduction

- Building and running a simple example model

- Distributions in ModelRisk

- List of all ModelRisk functions

- Custom applications and macros

- ModelRisk functions explained

- Tamara - project risk analysis

- Introduction to Tamara project risk analysis software

- Launching Tamara

- Importing a schedule

- Assigning uncertainty to the amount of work in the project

- Assigning uncertainty to productivity levels in the project

- Adding risk events to the project schedule

- Adding cost uncertainty to the project schedule

- Saving the Tamara model

- Running a Monte Carlo simulation in Tamara

- Reviewing the simulation results in Tamara

- Using Tamara results for cost and financial risk analysis

- Creating, updating and distributing a Tamara report

- Tips for creating a schedule model suitable for Monte Carlo simulation

- Random number generator and sampling algorithms used in Tamara

- Probability distributions used in Tamara

- Correlation with project schedule risk analysis

- Pelican - enterprise risk management